Abstract

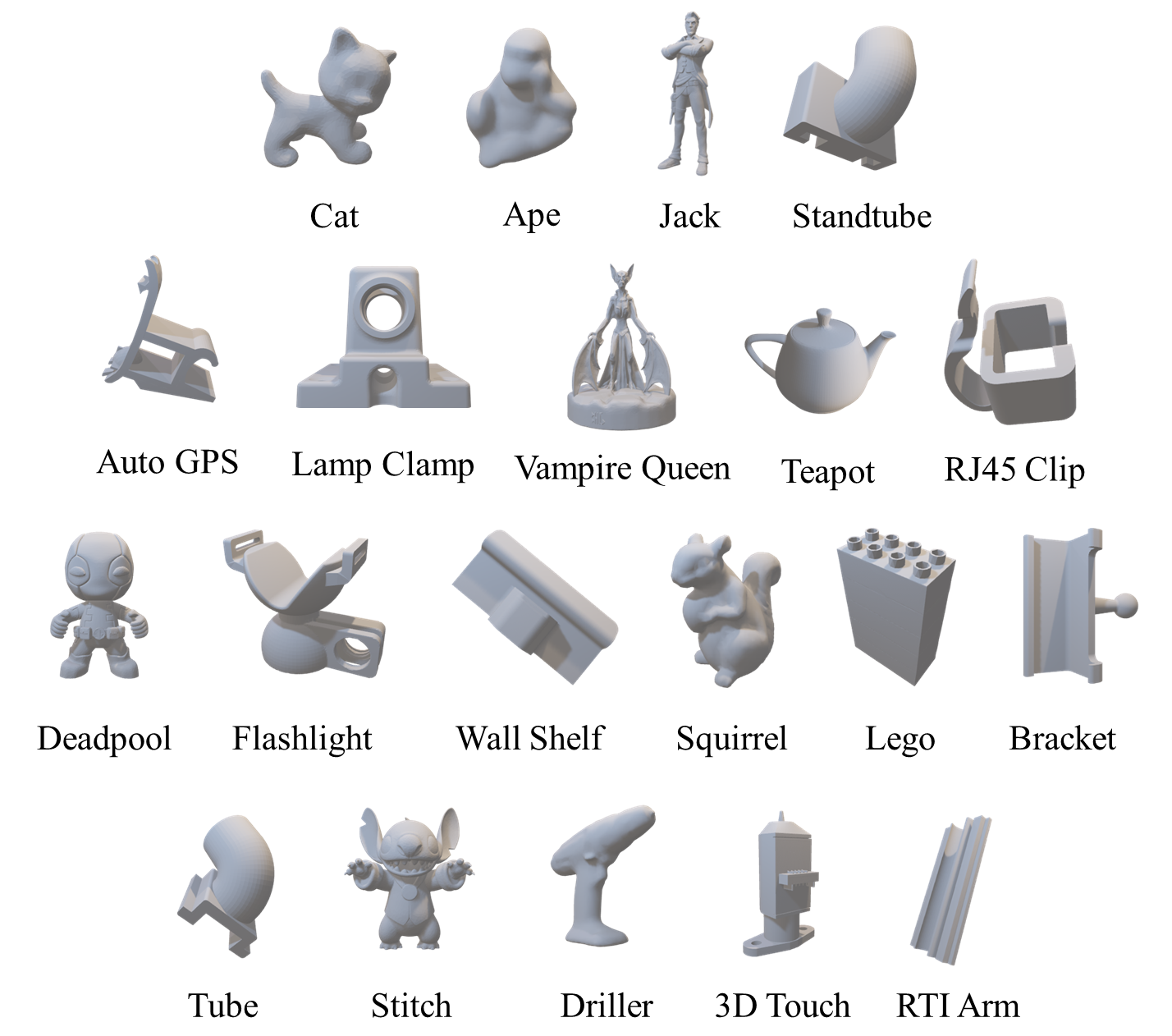

Template-based 3D object tracking still lacks a high-precision benchmark of real scenes, because it is difficult to annotate accurate 3D poses of real moving objects in video without using markers. In this paper, we present a multi-view approach to estimate the accurate 3D poses of real moving objects, and then use binocular data to construct a new benchmark for monocular textureless 3D object tracking. The proposed method requires no markers; the cameras only need to be synchronized, fixed relative to one another across views, and calibrated. Based on our object-centered model, we jointly optimize the object pose by minimizing shape re-projection constraints in all views, which greatly improves accuracy compared with the single-view approach and is even more accurate than the depth-based method. Our new benchmark dataset contains 20 textureless objects, 22 scenes, 404 video sequences and 126K images captured in real scenes. The annotation error is guaranteed to be less than 2 mm, according to both theoretical analysis and validation experiments. We re-evaluate state-of-the-art 3D object tracking methods on our dataset and report their performance ranking in real scenes.
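The idea of one object pose constrained by several calibrated views can be illustrated with a minimal sketch. It is not the authors' formulation: the paper minimizes shape re-projection constraints, whereas this example uses plain point re-projection errors as a simplified stand-in. All names (`project`, `multiview_residual`, the `extrinsics` and `observations` arguments) are hypothetical, and a pinhole camera without distortion is assumed.

```python
import numpy as np


def project(points_cam, K):
    """Pinhole projection of 3D points (camera coordinates) to pixel coordinates."""
    uvw = points_cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def multiview_residual(points, R, t, extrinsics, intrinsics, observations):
    """Stack re-projection residuals over all views for ONE shared object pose.

    points       : (N, 3) model points in object coordinates
    R, t         : object pose in the reference camera frame
    extrinsics   : list of (R_k, t_k) mapping reference-camera coordinates to
                   view k (known, because the cameras are fixed and calibrated)
    intrinsics   : list of 3x3 camera matrices, one per view
    observations : list of (N, 2) observed pixel positions, one per view
    """
    p_ref = points @ R.T + t  # model points in the reference camera frame
    residuals = []
    for (R_k, t_k), K, obs in zip(extrinsics, intrinsics, observations):
        p_k = p_ref @ R_k.T + t_k  # same points expressed in view k
        residuals.append((project(p_k, K) - obs).ravel())
    return np.concatenate(residuals)


# Synthetic two-view setup (illustrative values only).
points = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]])
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
extrinsics = [(np.eye(3), np.zeros(3)), (np.eye(3), np.array([-0.2, 0.0, 0.0]))]
intrinsics = [K, K]

R_true, t_true = np.eye(3), np.array([0.0, 0.0, 1.0])
observations = [
    project((points @ R_true.T + t_true) @ R_k.T + t_k, K_k)
    for (R_k, t_k), K_k in zip(extrinsics, intrinsics)
]

res_true = multiview_residual(points, R_true, t_true, extrinsics, intrinsics, observations)
res_off = multiview_residual(
    points, R_true, t_true + np.array([0.01, 0.0, 0.0]), extrinsics, intrinsics, observations
)
```

Because every view contributes residuals for the same pose parameters, a solver that minimizes the stacked vector (for example a least-squares routine) is constrained by all views at once, which is the intuition behind the accuracy gain over a single view.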

Download Dataset

OneDrive | BaiduYun (extraction code: xgkm)

Code

Download code

Demo

Models

Datasets

Video

Evaluation

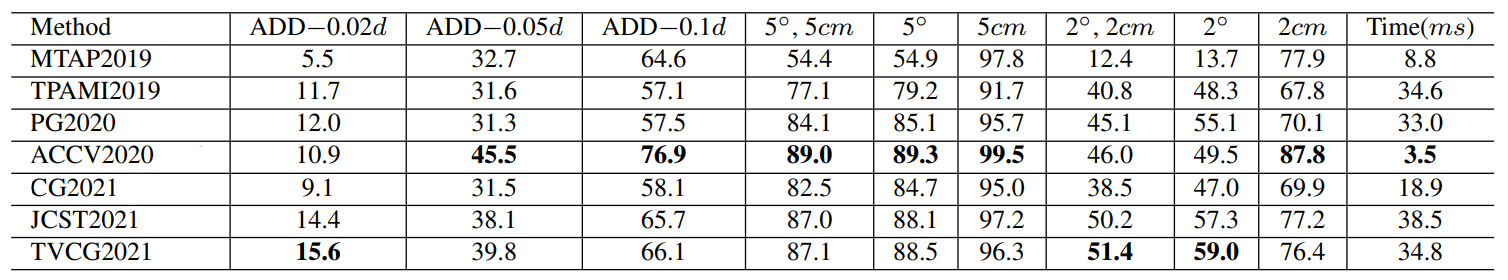

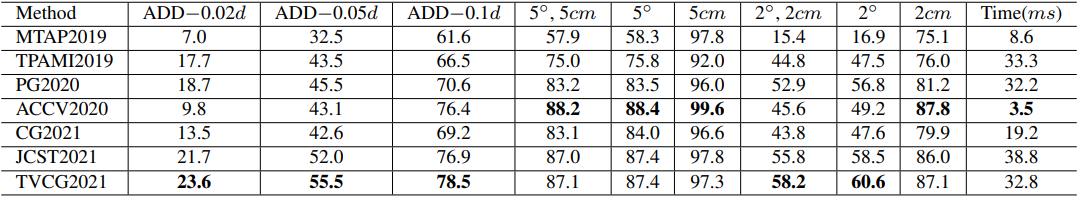

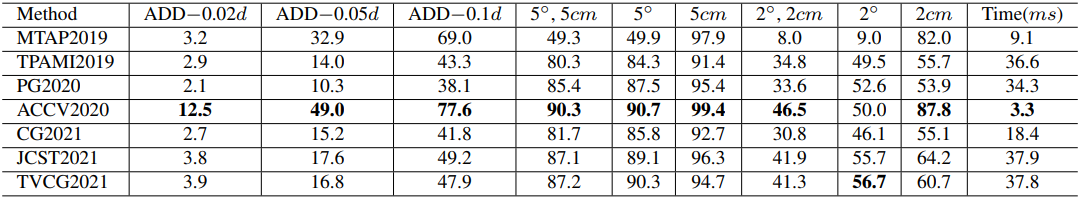

Comparison of monocular 3D tracking methods

Comparison of monocular 3D tracking methods in indoor scenes

Comparison of monocular 3D tracking methods in outdoor scenes

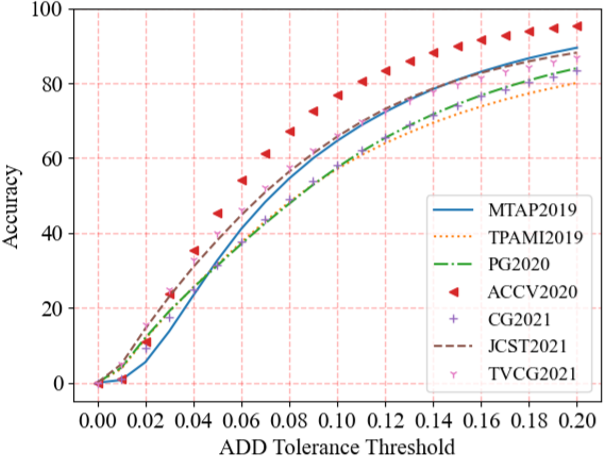

Overall tracking accuracy under various ADD error tolerance thresholds

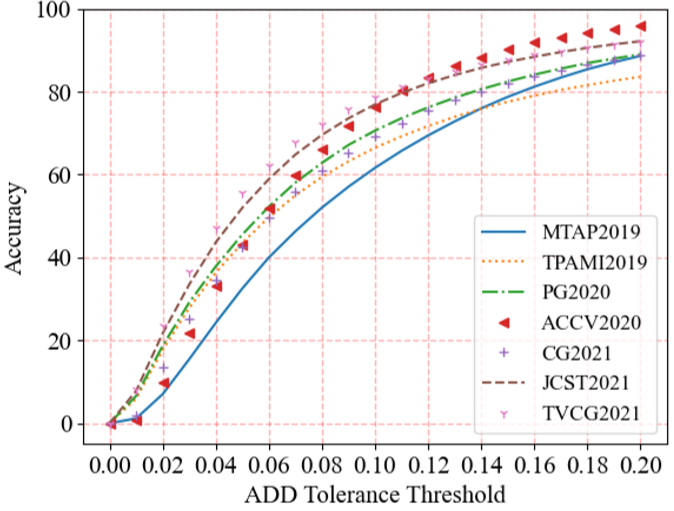

Indoor scene tracking accuracy under various ADD error tolerance thresholds

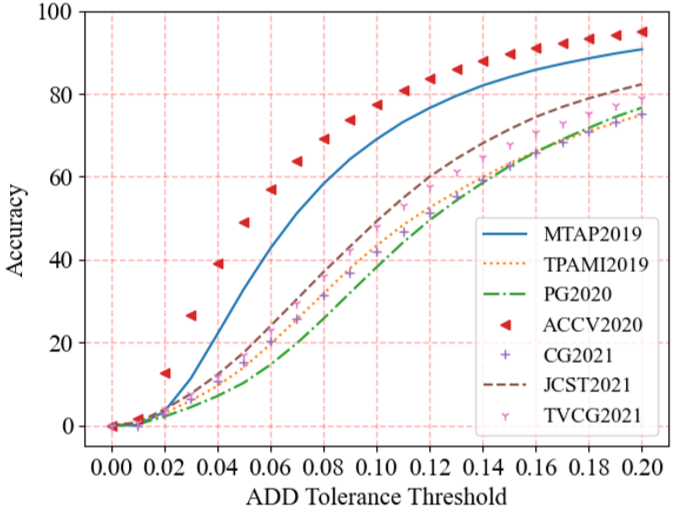

Outdoor scene tracking accuracy under various ADD error tolerance thresholds
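For readers unfamiliar with the metric in the plots above, the following is a minimal sketch of how an ADD error and an accuracy-under-threshold value are commonly computed. ADD is taken here in its usual sense: the mean distance between model points transformed by the estimated pose and by the ground-truth pose. Expressing the tolerance as a fraction of the model diameter is an assumption of this sketch, and the function names are hypothetical rather than taken from the released code.

```python
import numpy as np


def add_error(points, R_est, t_est, R_gt, t_gt):
    """Mean distance between model points under the estimated and ground-truth poses."""
    p_est = points @ R_est.T + t_est
    p_gt = points @ R_gt.T + t_gt
    return float(np.linalg.norm(p_est - p_gt, axis=1).mean())


def model_diameter(points):
    """Largest pairwise distance between model points (brute force, small sets only)."""
    diffs = points[:, None, :] - points[None, :, :]
    return float(np.linalg.norm(diffs, axis=2).max())


def add_accuracy(errors, diameter, fraction):
    """Share of frames whose ADD error is below fraction * diameter (assumed convention)."""
    errors = np.asarray(errors, dtype=float)
    return float((errors < fraction * diameter).mean())


# Illustrative data: the eight corners of a unit cube as the "model".
cube = np.array([[x, y, z] for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)])
identity = np.eye(3)
t_gt = np.array([0.0, 0.0, 5.0])

# A pure translation offset of 0.01 along x moves every point by the same amount.
err = add_error(cube, identity, t_gt + np.array([0.01, 0.0, 0.0]), identity, t_gt)
diam = model_diameter(cube)

# Accuracy over three hypothetical per-frame errors at a 5% diameter tolerance.
acc = add_accuracy([0.01, 0.05, 0.2], diam, 0.05)
```

Sweeping `fraction` over a range of values and plotting `add_accuracy` for each one gives a curve of the same kind as those shown in the figures.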

Paper

BCOT: A Markerless High-Precision 3D Object Tracking Benchmark. Jiachen Li, Bin Wang, Shiqiang Zhu, Xin Cao, Fan Zhong, Wenxuan Chen, Te Li*, Jason Gu, Xueying Qin*. CVPR 2022.

Project Page

For more information, please visit our project page.